In the rapidly evolving landscape of artificial intelligence, a new term has emerged, causing a stir in boardrooms, on job boards, and in digital cafes alike: prompt engineering. To many, it sounds like yet another highly technical discipline reserved for seasoned coders and data scientists. But what if I told you that the heart of prompt engineering has less to do with code and more to do with creativity, communication, and critical thinking? What if I told you that, even without a technical background, you likely already possess the foundational skills to be an effective prompt engineer?

What is Prompt Engineering?

Imagine you have an incredibly talented, but very literal, personal assistant. This assistant has access to the sum of human knowledge, can write in any style imaginable, create stunning images, and solve complex problems. There’s a catch, though: it will only do exactly what you tell it to do. Vague instructions lead to vague results. Unclear requests yield confusing output.

In essence, artificial intelligence, particularly large language models (LLMs) like OpenAI's GPT-4o or Google's Gemini, is this brilliant yet literal assistant. And prompt engineering is the art and science of crafting the instructions—the "prompts"—that guide this assistant to deliver precisely what you need.

It’s a dialogue, a conversation between human and machine. The prompt is the opening line of that dialogue. It can be a simple question, a complex command, an incomplete statement, or even an example of what you want to see. A well-crafted prompt is the difference between AI-generated gibberish and an AI-generated masterpiece. It’s the bridge between human intent and machine execution. It’s less about programming and more about communicating with clarity, context, and creativity.

Why is Prompt Engineering Important?

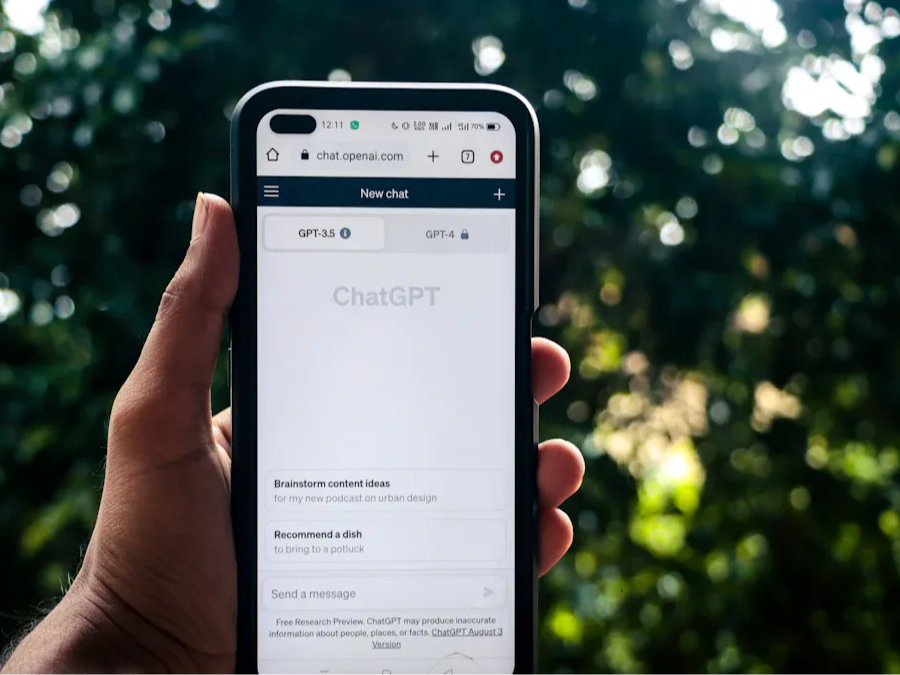

The performance of AI tools like ChatGPT depends heavily on the input they receive. Even the most advanced model can give mediocre results if you ask vague questions.

Here’s why prompt engineering is a big deal:

- It unlocks the AI’s full potential. Well-crafted prompts lead to better answers, more relevance, and higher-quality responses.

- It saves time. Instead of refining or editing bad outputs repeatedly, a strong prompt can deliver spot-on answers from the get-go.

- It’s widely applicable. From writing marketing copy to coding assistance, prompt engineering is useful across industries.

As AI tools get more capable, the person who can best communicate with them becomes more valuable.

Why Prompt Engineering Isn't Just for Technical People

Contrary to popular belief, you don’t need to be a programmer or AI expert to master prompt engineering.

In fact, non-technical people often excel at it because the core of prompt engineering is effective communication, instruction, and creativity.

Here’s why anyone can learn prompt engineering:

- It’s language-based, not code-based.

- Everyday writing skills apply. If you’ve written an email, a to-do list, or a school essay, you already have foundational skills.

- Empathy matters. Understanding your audience—whether human or machine—is crucial for crafting useful prompts.

Whether you're in marketing, education, HR, or customer service, prompt engineering can enhance your work with no code involved.

Tried-and-True Techniques to Boost Your Prompting Game

To get better at prompt engineering, you need to learn a few simple techniques. Here are some powerful ones:

1. Be Specific and Clear

Bad Prompt: “Write something about climate change.”

Good Prompt: “Write a 300-word summary explaining climate change causes and effects, using a tone suitable for high school students.”

2. Set Context and Role

Prompt: “Act as a professional resume coach. Rewrite the following bullet point to be more action-driven and concise.”

3. Use Examples (Few-shot prompting)

Provide examples to guide the AI’s output.

Prompt: “Here are three examples of funny tweets: [paste your examples]. Based on this style, write five new tweets about coffee.”

4. Request Format and Style

Prompt: “List 5 productivity tips in bullet format, each under 20 words.”

These techniques aren't difficult, but applying them consistently makes all the difference.
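None of this requires code, but if you happen to be comfortable with a little Python, the four techniques above can be sketched as one small helper. This is only an illustration: the function name `build_prompt` and its parameters are made up for this example, and the resulting text is something you could just as easily type by hand.

```python
def build_prompt(task, role=None, examples=(), output_format=None):
    """Assemble a prompt from a role, optional examples, a task, and a format."""
    parts = []
    if role:
        # Technique 2: set context and role
        parts.append(f"Act as {role}.")
    if examples:
        # Technique 3: show examples of the style you want (few-shot prompting)
        parts.append("Here are some examples of the style I want:")
        parts.extend(f"- {example}" for example in examples)
    # Technique 1: a specific, clear task
    parts.append(task)
    if output_format:
        # Technique 4: request format and style
        parts.append(f"Format: {output_format}")
    return "\n".join(parts)


prompt = build_prompt(
    task="List 5 productivity tips for remote workers.",
    role="a professional productivity coach",
    output_format="bullet points, each under 20 words",
)
print(prompt)
```

The point of the sketch is the order of the pieces: who the AI should be, what good output looks like, what you want, and how it should be presented.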

5 Non-Tech Prompt Engineering Skills (That You Probably Already Have)

You don’t need a background in computer science to become skilled at prompt engineering. In fact, many of the abilities you use every day—often without realizing—can help you write more effective prompts. Here’s how five non-technical skills translate directly into prompt engineering success:

1. Clear Communication

Being able to explain something in simple, straightforward language is one of the most important prompt engineering skills. If you’ve ever written an email, texted a friend, or given instructions to a coworker, you’re already using this skill. When you craft a prompt, your goal is to eliminate ambiguity and tell the AI exactly what you want.

Example: Instead of “Explain Bitcoin,” write “Explain how Bitcoin works in 100 words using simple analogies for someone new to cryptocurrency.”

2. Critical Thinking

Prompt engineering requires iteration. You ask a question, assess the result, and decide if it meets your needs. If not, you adjust the input. That’s problem-solving in action—breaking down what went wrong and improving your query. This type of critical thinking is common in roles like teaching, management, and consulting.

You’re essentially debugging language, not code.

3. Empathy

This might sound unexpected, but empathy is powerful in prompt engineering. When you consider how your prompt will be interpreted—not just by people, but by an AI—you naturally write more thoughtful and clearer instructions. If you understand the tone, format, or complexity your audience expects, you can shape prompts to reflect that need.

“Act as a patient middle-school science teacher and explain photosynthesis” is more effective than just “Explain photosynthesis.”

4. Creativity

Thinking outside the box helps you reframe questions, test alternative phrasings, and invent imaginative scenarios for the AI to explore. This is especially useful for writers, educators, marketers, and even students.

Creative prompts include things like: “Write a poem about teamwork in the voice of a pirate.”

5. Problem Solving

Many complex prompts work better when you break the task into smaller steps, or when you ask the AI to reason through the problem one step at a time. That second approach is called chain-of-thought prompting. It’s like outlining a plan: you think logically about what comes first, next, and last. This mirrors everyday problem-solving in planning events, setting goals, or organizing projects.

Prompt: “Solve this math problem step-by-step, explaining your reasoning after each step.”

Prompt Engineering Tips and Best Practices

Becoming a better prompt engineer isn’t just about theory. It’s about practice. Here are some practical tips to help you refine your prompting technique:

1. Start Simple, Then Iterate

Don’t overcomplicate your prompt from the beginning. Start with a basic version and test the results. Then adjust based on what the AI gives you. This process of iterative refinement helps you understand what works and what doesn’t.

Tip: Keep a notebook or digital document of prompt revisions to track what changes improved results.
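A notebook works fine for this, but the same habit can be sketched in a few lines of Python for anyone who prefers it. The names `PromptRevision` and `log_revision` are hypothetical, and the notes are illustrative descriptions of what changed, not recorded results.

```python
from dataclasses import dataclass


@dataclass
class PromptRevision:
    version: int
    prompt: str
    note: str  # what changed in this version


revisions = []


def log_revision(prompt, note):
    """Store a prompt with a note and an automatically increasing version number."""
    revision = PromptRevision(version=len(revisions) + 1, prompt=prompt, note=note)
    revisions.append(revision)
    return revision


log_revision("Write something about climate change.", "First attempt.")
log_revision(
    "Write a 300-word summary explaining climate change causes and effects, "
    "using a tone suitable for high school students.",
    "Added length, focus, and audience.",
)

for revision in revisions:
    print(f"v{revision.version}: {revision.note}")
```

Whatever tool you use, the useful part is the note: writing down what you changed makes it much easier to see which change actually helped.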

2. Be Clear About Instructions, Format, and Tone

Good prompts provide not just the “what,” but also the “how.” If you want bullet points, say so. Want a formal tone? Specify it. Want a funny tweet? Make that clear. The more you guide the AI, the better the output.

Example: “Summarize this in bullet points, each under 20 words, using a friendly tone.”

3. Use Prompt Templates

Prompt templates are reusable frameworks. They save time and standardize results. For example:

- For summaries: “Summarize the following text in 5 bullet points.”

- For translation: “Translate the following to [language] using an informal tone.”

- For creativity: “Write a story that begins with ‘It was a rainy Tuesday morning...’ and ends in a twist.”

Bonus Tip: Save your most effective prompts in a spreadsheet or notes app.
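If your saved prompts ever outgrow a spreadsheet, a template is easy to express in Python too. The sketch below is one possible way to do it, using Python's built-in string formatting; the `TEMPLATES` dictionary and `fill_template` function are names invented for this example.

```python
# Reusable templates with named blanks in curly braces.
TEMPLATES = {
    "summary": "Summarize the following text in {count} bullet points.\n\n{text}",
    "translation": "Translate the following to {language} using an informal tone.\n\n{text}",
}


def fill_template(name, **values):
    """Look up a template by name and fill in its blanks."""
    return TEMPLATES[name].format(**values)


prompt = fill_template("translation", language="Spanish", text="See you tomorrow!")
print(prompt)
```

The blanks play the same role as the square brackets in a handwritten template: they mark the parts that change from one use to the next, while everything else stays consistent.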

4. Assign Roles to the AI

Role-based prompting helps the model assume a perspective. You can say, “Act as a senior software engineer,” or “Pretend you’re a financial advisor for retirees.” This creates richer, more context-aware responses.

Role prompt: “You are a nutritionist. Recommend a weekly meal plan for someone with type 2 diabetes.”
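For readers who go on to use AI tools through code rather than a chat window: many chat-style services accept a list of messages, each tagged with a role such as "system" (background instructions) or "user" (your request). The sketch below assumes that general shape. Exact field names vary by provider, and `role_messages` is a hypothetical helper that only builds the list without contacting any service.

```python
def role_messages(role_description, request):
    """Pair a role description with a request in a common chat-message layout."""
    return [
        # The role goes first, as standing instructions for the whole conversation.
        {"role": "system", "content": role_description},
        # The actual request follows as the user's message.
        {"role": "user", "content": request},
    ]


messages = role_messages(
    "You are a nutritionist.",
    "Recommend a weekly meal plan for someone with type 2 diabetes.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role separate from the request makes it easy to reuse the same role across many different questions.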

5. Limit Length and Scope When Needed

Language models may generate too much or too little content. You can manage this by specifying length (“In under 150 words”), time frame (“Using only events from the past 5 years”), or complexity (“Explain this at a 5th-grade reading level”).
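A length limit in a prompt is a request, not a guarantee, so it can be worth checking the result. As a rough sketch, assuming that counting whitespace-separated words is a good enough measure, a check might look like this (both function names are made up for the example):

```python
def count_words(text):
    """Count whitespace-separated words in a piece of text."""
    return len(text.split())


def is_under_limit(text, max_words):
    """Return True if the text has fewer than max_words words."""
    return count_words(text) < max_words


response = "Climate change is driven mainly by greenhouse gas emissions."
print(count_words(response), is_under_limit(response, 150))
```

If the response runs over, that is a cue to tighten the prompt, for example by restating the limit or narrowing the scope.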

6. Experiment Frequently

Prompt engineering is not static. A prompt that works today may behave differently as models change. Keep experimenting and testing different phrasing, constraints, and structures.

Curiosity is your superpower here.

Use What You Know—And Let AI Do the Rest

The best part about prompt engineering? You already have what it takes.

Whether you’re a clear communicator, a creative thinker, or someone who solves problems with ease—those everyday talents are the exact tools you need to thrive in this space. You don’t need a degree in AI or years of programming experience. You just need curiosity, a willingness to experiment, and the confidence to bring your unique voice into the conversation with machines.

As AI becomes more accessible and embedded into daily life, prompt engineering is no longer a niche skill—it’s a superpower for anyone who wants to think better, write faster, and solve smarter. So don’t underestimate what you already bring to the table.

Your words have more power than you think. Start using them.

FAQs About Prompt Engineering

1. What is Prompt Engineering in simple terms?

Prompt Engineering is designing smart inputs to get useful outputs from AI models.

2. Do I need to know coding to become a Prompt Engineer?

Not necessarily. Creative writing and critical thinking are just as important.

3. Is Prompt Engineering a real job?

Yes. Some companies hire for dedicated prompt engineering roles, and prompting is increasingly a valued skill in other jobs that use AI tools.

4. What tools do Prompt Engineers use?

Common ones include OpenAI Playground, FlowGPT, and Notion AI.

5. How can I learn Prompt Engineering for free?

Check OpenAI’s docs, GitHub resources, and YouTube tutorials.

6. Can Prompt Engineering be automated?

Partially, but human creativity and intent are hard to replace.